Poking around the classic MacOS has been an interesting experiment.

One of the things I find remarkable, brilliant, and rather lovely is getting the old Macs to boot! It seems like just about anything with a usable System Folder and some means of doing block I/O will boot. Compared to mucking with MBR-based chain loading schemes and infernally buggy BIOSes, this has been a big plus. Offsetting that is how Apple’s partitioning tool refuses to initialize SCSI disks without some kind of ROM identifying the disk as one of theirs, a restriction that seems to have been dropped by the later IDE days.

For the most part I have chosen to ignore the desktop on PCs in preference to a home directory. I’ve known people who cover the entire Windows desktop in icons. Mine has largely been spartan since I focused on UNIX systems, and since XP tried to make multiple users suck less on shared home PCs.

Classic MacOS, on the other hand, makes the desktop curiously inescapable. It actually feels more like a “Shelf” to me than a desktop, because its behavior is not like the desktops I am used to. On most “Desktop” operating systems that I’ve used, the actual desktop was simply a special folder. If you stuff a file on it, the only difference from any other folder is not needing a file manager or a bunch of tabs or clicks to reach it later, because you’ll just be moving windows out of the way to see it or using a shortcut to navigate there.

I’ve found that moving a file from a floppy disk to the desktop doesn’t move the file off the diskette so much as flag it as part of the desktop. Moving it somewhere else then generates the kind of I/O event other platforms do. Further, when booting from other media, the desktop is subsumed into the current session. I.e. boot off a Disk Utils floppy and you’ll still see the desktop, but the icons for your HDD and floppy will have switched positions. That’s actually kind of cool in my humble opinion.

The trash seems to work similarly. Trashing a file on a floppy does not return the space, but unlike some platforms the file does go to the trash rather than forcing a Unix-style deletion.
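To make the desktop and trash behavior above a bit more concrete, here is a toy model in Python. It is only a sketch of what I observed, not how the Finder is actually implemented: the `Volume` class, its fields, and every function name are hypothetical, and `empty_trash` is my assumption about when the space finally comes back.

```python
from dataclasses import dataclass, field


@dataclass
class Volume:
    """Hypothetical stand-in for a mounted disk (floppy, hard drive, ...)."""
    name: str
    files: set = field(default_factory=set)       # items shown inside the volume's window
    on_desktop: set = field(default_factory=set)  # items flagged as sitting on the desktop
    in_trash: set = field(default_factory=set)    # trashed items that still occupy space

    def used_items(self):
        # Everything still stored on this volume, wherever it is displayed.
        return self.files | self.on_desktop | self.in_trash


def move_to_desktop(vol, item):
    """Re-flag an item as a desktop item; nothing is copied off the volume."""
    vol.files.remove(item)
    vol.on_desktop.add(item)


def move_to_trash(vol, item):
    """Trash an item; in this model it stays on its own volume."""
    vol.files.discard(item)
    vol.on_desktop.discard(item)
    vol.in_trash.add(item)


def empty_trash(vol):
    """Assumption: only emptying the trash actually frees the space."""
    vol.in_trash.clear()


def desktop_view(mounted):
    """The desktop the user sees: a merge over every mounted volume."""
    return sorted((v.name, item) for v in mounted for item in v.on_desktop)
```

In this model, dragging a file from the floppy to the desktop changes `desktop_view(...)` but leaves `used_items()` on the floppy untouched, and ejecting the floppy (dropping it from the mounted list) makes its desktop items vanish from the view.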

When working with the desktop and your hard drive, data placed on the desktop seems to be treated as if it were at the root of the drive. Opening a file info dialog will show a path like “MacHD: My Folder or File”, yet you won’t see the item in the actual drive window: just on the desktop. One thing that made this apparent to me is the option to default to a “Documents” folder for the file open/save dialogs. System 7.5 created a Documents folder on my desktop, but it doesn’t appear in MacHD despite the path shown in Get Info. I opted to leave an alias on the desktop and move the original into the HDD view, reflecting how I found the file system on my Wallstreet’s MacOS 9.2.2 install.

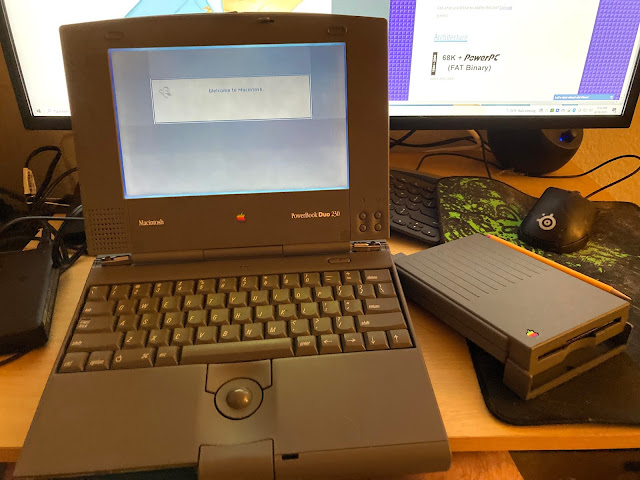

At a more general level, I get the feeling that Apple’s designers really did not believe in the keyboard. There are shortcuts for many common tasks, but when it comes to manipulating text the system UI has been “use the mouse or piss off”. Even simple behaviors we now take for granted, like shift+arrow to select text, do not exist in System 7.5. Fortunately, I actually like the trackball :P.